Math 0-1: Probability For Data Science & Machine Learning

Published 9/2024

MP4 | Video: h264, 1920x1080 | Audio: AAC, 44.1 kHz

Language: English | Size: 12.73 GB | Duration: 17h 30m

A Casual Guide for Artificial Intelligence, Deep Learning, and Python Programmers

What you'll learn

Conditional probability, Independence, and Bayes' Rule

Use of Venn diagrams and probability trees to visualize probability problems

Discrete random variables and distributions: Bernoulli, categorical, binomial, geometric, Poisson

Continuous random variables and distributions: uniform, exponential, normal (Gaussian), Laplace, Gamma, Beta

Cumulative distribution functions (CDFs), probability mass functions (PMFs), probability density functions (PDFs)

Joint, marginal, and conditional distributions

Multivariate distributions, random vectors

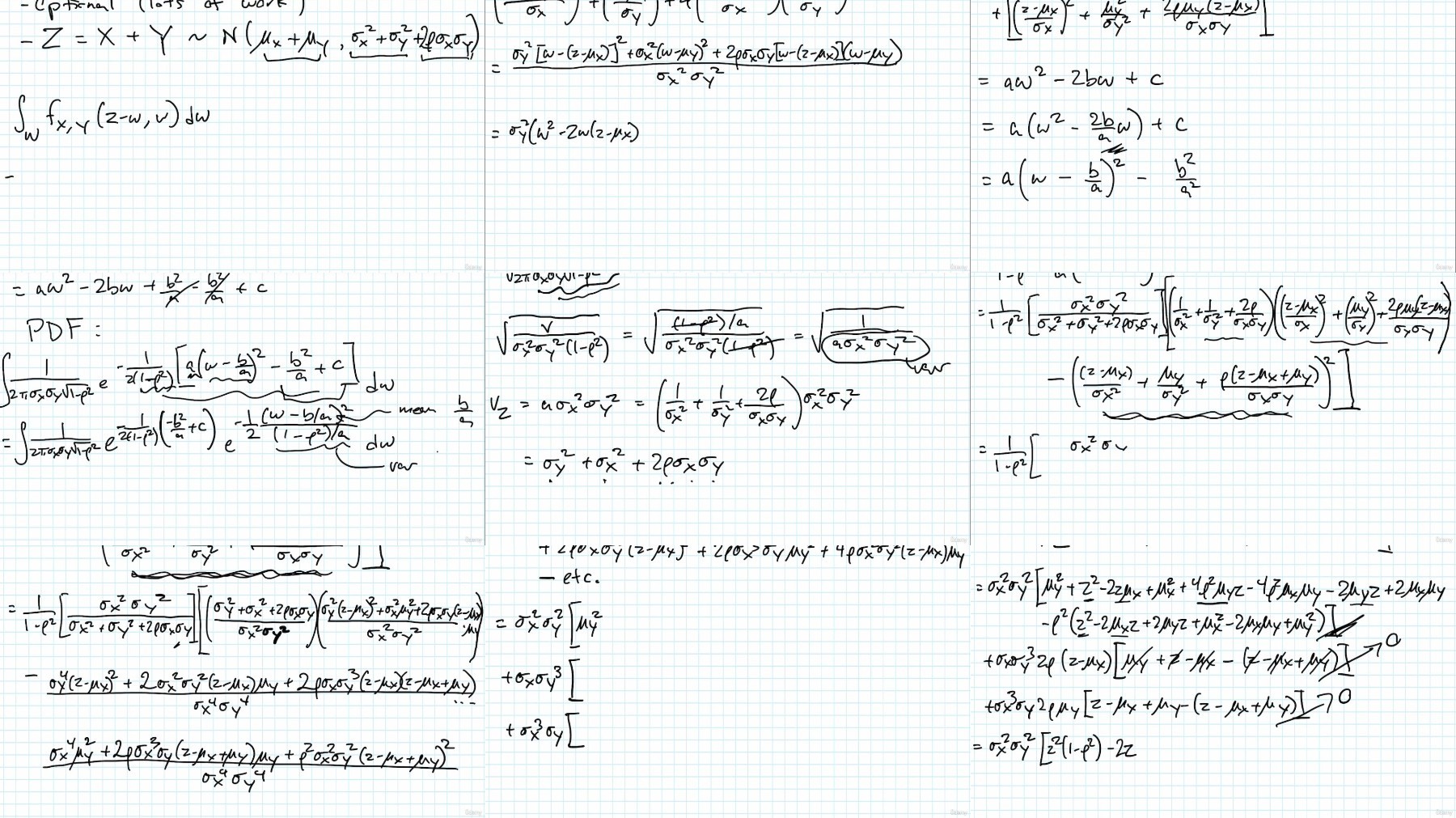

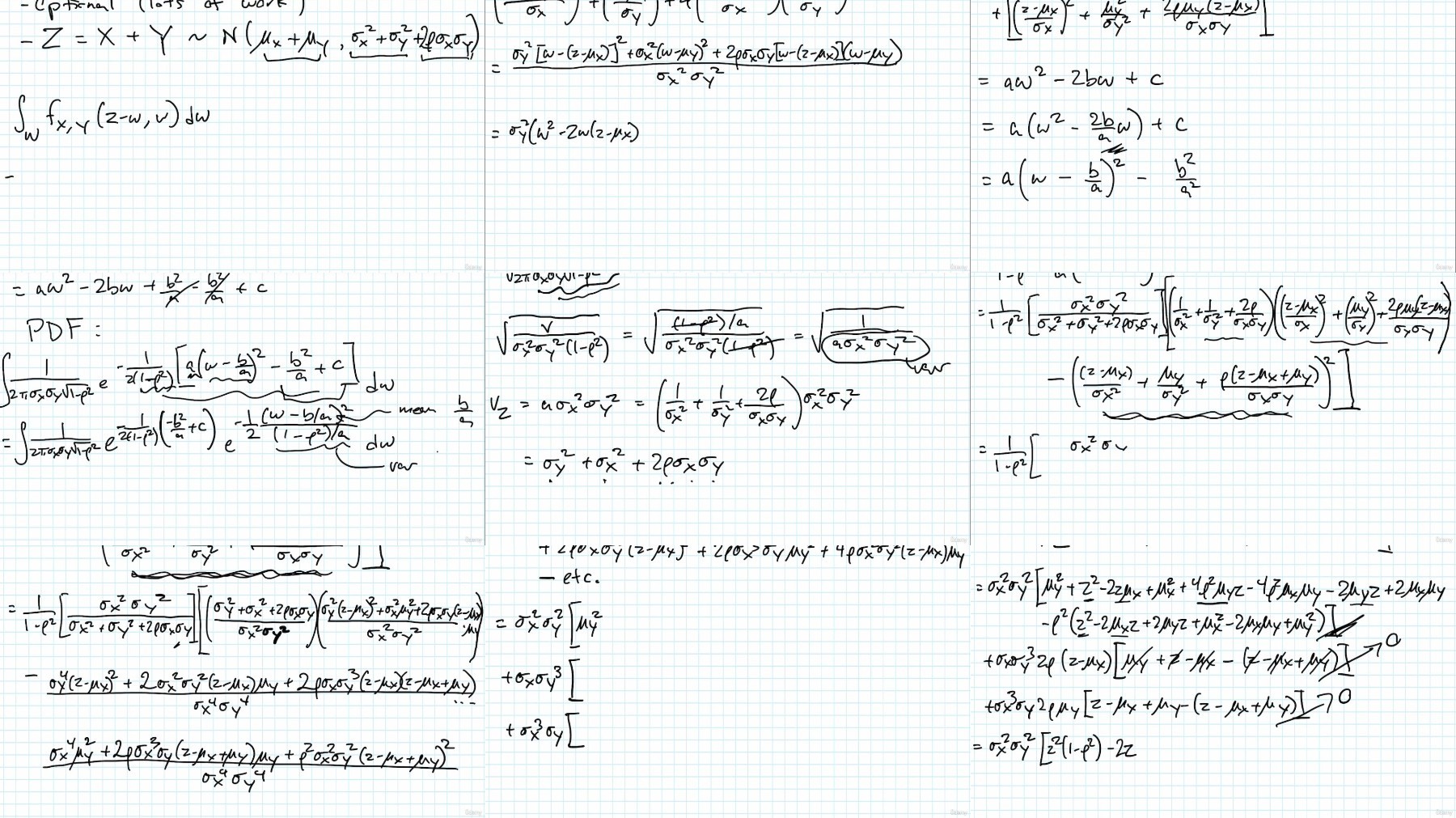

Functions of random variables, sums of random variables, convolution

Expected values, expectation, mean, and variance

Skewness, kurtosis, and moments

Covariance and correlation, covariance matrix, correlation matrix

Moment generating functions (MGF) and characteristic functions

Key inequalities like Markov, Chebyshev, Cauchy-Schwarz, Jensen

Convergence in probability, convergence in distribution, almost sure convergence

Law of large numbers and the Central Limit Theorem (CLT)

Applications of probability in machine learning, data science, and reinforcement learning

Requirements

College / University-level Calculus (for most parts of the course)

College / University-level Linear Algebra (for some parts of the course)

Description

Common scenario: You try to get into machine learning and data science, but there's SO MUCH MATH.

Either you never studied this math, or you studied it so long ago you've forgotten it all.

What do you do?

Well my friends, that is why I created this course.

Probability is one of the most important math prerequisites for data science and machine learning. It's required to understand essentially everything we do, from the latest LLMs like ChatGPT, to diffusion models like Stable Diffusion and Midjourney, to statistics (what I like to call "probability part 2").

Markov chains, an important concept in probability, form the basis of popular models like the Hidden Markov Model (with applications in speech recognition, DNA analysis, and stock trading) and the Markov Decision Process or MDP (the basis for Reinforcement Learning).

Machine learning (statistical learning) itself has a probabilistic foundation. Specific models, like Linear Regression, K-Means Clustering, Principal Components Analysis, and Neural Networks, all make use of probability.

In short, probability cannot be avoided!

If you want to do machine learning beyond just copying library code from blogs and tutorials, you must know probability.

This course will cover everything that you'd learn (and maybe a bit more) in an undergraduate-level probability class. This includes random variables and random vectors, discrete and continuous probability distributions, functions of random variables, multivariate distributions, expectation, generating functions, the law of large numbers, and the central limit theorem.

Most important theorems will be derived from scratch. Don't worry, as long as you meet the prerequisites, they won't be difficult to understand. This will ensure you have the strongest foundation possible in this subject. No more memorizing "rules" only to apply them incorrectly / inappropriately in the future! This course will provide you with a deep understanding of probability so that you can apply it correctly and effectively in data science, machine learning, and beyond.

Are you ready?

Let's go!

Suggested prerequisites:

Differential calculus, integral calculus, and vector calculus

Linear algebra

General comfort with university/college-level mathematics

Overview

Section 1: Welcome

Lecture 1 Introduction

Lecture 2 Outline

Lecture 3 How to Succeed in this Course

Section 2: Probability Basics

Lecture 4 What Is Probability?

Lecture 5 Wrong Definition of Probability (Common Mistake)

Lecture 6 Wrong Definition of Probability (Example)

Lecture 7 Probability Models

Lecture 8 Venn Diagrams

Lecture 9 Properties of Probability Models

Lecture 10 Union Example

Lecture 11 Law of Total Probability

Lecture 12 Conditional Probability

Lecture 13 Bayes' Rule

Lecture 14 Bayes' Rule Example

Lecture 15 Independence

Lecture 16 Mutual Independence Example

Lecture 17 Probability Tree Diagrams

Section 3: Random Variables and Probability Distributions

Lecture 18 What is a Random Variable?

Lecture 19 The Bernoulli Distribution

Lecture 20 The Categorical Distribution

Lecture 21 The Binomial Distribution

Lecture 22 The Geometric Distribution

Lecture 23 The Poisson Distribution

Section 4: Continuous Random Variables and Probability Density Functions

Lecture 24 Continuous Random Variables and Continuous Distributions

Lecture 25 Physics Analogy

Lecture 26 More About Continuous Distributions

Lecture 27 The Uniform Distribution

Lecture 28 The Exponential Distribution

Lecture 29 The Normal Distribution (Gaussian Distribution)

Lecture 30 The Laplace (Double Exponential) Distribution

Section 5: More About Probability Distributions and Random Variables

Lecture 31 Cumulative Distribution Function (CDF)

Lecture 32 Exercise: CDF of Geometric Distribution

Lecture 33 CDFs for Continuous Random Variables

Lecture 34 Exercise: CDF of Normal Distribution

Lecture 35 Change of Variables (Functions of Random Variables) pt 1

Lecture 36 Change of Variables (Functions of Random Variables) pt 2

Lecture 37 Joint and Marginal Distributions pt 1

Lecture 38 Joint and Marginal Distributions pt 2

Lecture 39 Exercise: Marginal of Bivariate Normal

Lecture 40 Conditional Distributions and Bayes' Rule

Lecture 41 Independence

Lecture 42 Exercise: Bivariate Normal with Zero Correlation

Lecture 43 Multivariate Distributions and Random Vectors

Lecture 44 Multivariate Normal Distribution / Vector Gaussian

Lecture 45 Multinomial Distribution

Lecture 46 Exercise: MVN to Bivariate Normal

Lecture 47 Exercise: Multivariate Normal, Zero Correlation Implies Independence

Lecture 48 Multidimensional Change of Variables (Discrete)

Lecture 49 Multidimensional Change of Variables (Continuous)

Lecture 50 Convolution From Adding Random Variables

Lecture 51 Exercise: Sums of Jointly Normal Random Variables (Optional)

Section 6: Expectation and Expected Values

Lecture 52 Expected Value and Mean

Lecture 53 Properties of the Expected Value

Lecture 54 Variance

Lecture 55 Exercise: Mean and Variance of Bernoulli

Lecture 56 Exercise: Mean and Variance of Poisson

Lecture 57 Exercise: Mean and Variance of Normal

Lecture 58 Exercise: Mean and Variance of Exponential

Lecture 59 Moments, Skewness and Kurtosis

Lecture 60 Exercise: Kurtosis of Normal Distribution

Lecture 61 Covariance and Correlation

Lecture 62 Exercise: Covariance and Correlation of Bivariate Normal

Lecture 63 Exercise: Zero Correlation Does Not Imply Independence

Lecture 64 Exercise: Correlation Measures Linear Relationships

Lecture 65 Conditional Expectation pt 1

Lecture 66 Conditional Expectation pt 2

Lecture 67 Law of Total Expectation

Lecture 68 Exercise: Linear Combination of Normals

Lecture 69 Exercise: Mean and Variance of Weighted Sums

Section 7: Generating Functions

Lecture 70 Moment Generating Functions (MGF)

Lecture 71 Exercise: MGF of Exponential

Lecture 72 Exercise: MGF of Normal

Lecture 73 Characteristic Functions

Lecture 74 Exercise: MGF Doesn't Exist

Lecture 75 Exercise: Characteristic Function of Normal

Lecture 76 Sums of Independent Random Variables

Lecture 77 Exercise: Distribution of Sum of Poisson Random Variables

Lecture 78 Exercise: Distribution of Sum of Geometric Random Variables

Lecture 79 Moment Generating Functions for Random Vectors

Lecture 80 Characteristic Functions for Random Vectors

Lecture 81 Exercise: Weighted Sums of Normals

Section 8: Inequalities

Lecture 82 Monotonicity

Lecture 83 Markov Inequality

Lecture 84 Chebyshev Inequality

Lecture 85 Cauchy-Schwarz Inequality

Section 9: Limit Theorems

Lecture 86 Convergence In Probability

Lecture 87 Weak Law of Large Numbers

Lecture 88 Convergence With Probability 1 (Almost Sure Convergence)

Lecture 89 Strong Law of Large Numbers

Lecture 90 Application: Frequentist Perspective Revisited

Lecture 91 Convergence In Distribution

Lecture 92 Central Limit Theorem

Section 10: Advanced and Other Topics

Lecture 93 The Gamma Distribution

Lecture 94 The Beta Distribution

Who this course is for

Python developers and software developers curious about Data Science

Professionals interested in Machine Learning and Data Science who haven't studied college-level math

Students interested in ML and AI who find they can't keep up with the math

Former STEM students who want to brush up on probability before learning about artificial intelligence

https://ddownload.com/mugan9g1wzq7/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part1.rar

https://ddownload.com/5vlkmi9mk24b/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part2.rar

https://ddownload.com/ajd69sz9rhov/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part3.rar

https://ddownload.com/h1wvzfck24qe/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part4.rar

https://rapidgator.net/file/e81d1dc8d1384a94f20a4e1db618a3bb/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part1.rar

https://rapidgator.net/file/fab4e543ac97b5cd845784f6a8418c31/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part2.rar

https://rapidgator.net/file/b11a32ad766af5db3e693b8c2a459e9a/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part3.rar

https://rapidgator.net/file/1824bbb2d16c5bc63378aa2bd4cf8865/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part4.rar

https://turbobit.net/yp6w58avnxa7/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part1.rar.html

https://turbobit.net/jkdnicsh5kdg/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part2.rar.html

https://turbobit.net/48tvu4chbkl2/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part3.rar.html

https://turbobit.net/70bfj1l4poa3/Udemy_Math_0_1_Probability_for_Data_Science_Machine_Learning_2024-9.part4.rar.html
